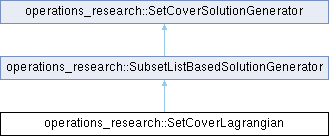

Definition at line 50 of file set_cover_lagrangian.h.

| Return type | Member |
| --- | --- |
| | SetCoverLagrangian (SetCoverInvariant *inv) |
| | SetCoverLagrangian (SetCoverInvariant *inv, const absl::string_view name) |
| SetCoverLagrangian & | UseNumThreads (int num_threads) |
| bool | NextSolution (absl::Span< const SubsetIndex > _) final |
| ElementCostVector | InitializeLagrangeMultipliers () const |
| SubsetCostVector | ComputeReducedCosts (const SubsetCostVector &costs, const ElementCostVector &multipliers) const |
| SubsetCostVector | ParallelComputeReducedCosts (const SubsetCostVector &costs, const ElementCostVector &multipliers) const |
| ElementCostVector | ComputeSubgradient (const SubsetCostVector &reduced_costs) const |
| ElementCostVector | ParallelComputeSubgradient (const SubsetCostVector &reduced_costs) const |
| Cost | ComputeLagrangianValue (const SubsetCostVector &reduced_costs, const ElementCostVector &multipliers) const |
| Cost | ParallelComputeLagrangianValue (const SubsetCostVector &reduced_costs, const ElementCostVector &multipliers) const |
| void | UpdateMultipliers (double step_size, Cost lagrangian_value, Cost upper_bound, const SubsetCostVector &reduced_costs, ElementCostVector *multipliers) const |
| void | ParallelUpdateMultipliers (double step_size, Cost lagrangian_value, Cost upper_bound, const SubsetCostVector &reduced_costs, ElementCostVector *multipliers) const |
| Cost | ComputeGap (const SubsetCostVector &reduced_costs, const SubsetBoolVector &solution, const ElementCostVector &multipliers) const |
| void | ThreePhase (Cost upper_bound) |
| std::tuple< Cost, SubsetCostVector, ElementCostVector > | ComputeLowerBound (const SubsetCostVector &costs, Cost upper_bound) |
| bool | NextSolution () final |
| bool | NextSolution (const SubsetBoolVector &in_focus) final |
| | SubsetListBasedSolutionGenerator (SetCoverInvariant *inv, SetCoverInvariant::ConsistencyLevel consistency_level, absl::string_view class_name, absl::string_view name) |
| bool | NextSolution (absl::Span< const SubsetIndex > _) override |
| bool | NextSolution () final |
| bool | NextSolution (const SubsetBoolVector &in_focus) final |
| | SetCoverSolutionGenerator (SetCoverInvariant *inv, SetCoverInvariant::ConsistencyLevel consistency_level, absl::string_view class_name, absl::string_view name) |
| virtual | ~SetCoverSolutionGenerator ()=default |
| void | SetName (const absl::string_view name) |
| SetCoverInvariant * | inv () const |
| virtual SetCoverSolutionGenerator & | ResetLimits () |
| SetCoverSolutionGenerator & | SetMaxIterations (int64_t max_iterations) |
| int64_t | max_iterations () const |
| SetCoverSolutionGenerator & | SetTimeLimitInSeconds (double seconds) |
| absl::Duration | run_time () const |
| double | run_time_in_seconds () const |
| double | run_time_in_microseconds () const |
| std::string | name () const |
| std::string | class_name () const |
| Cost | cost () const |
| bool | CheckInvariantConsistency () const |
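
The member names above (reduced costs, subgradient, Lagrangian value, multiplier update) follow the usual vocabulary of Lagrangian relaxation for set covering. The sketch below illustrates those four quantities in their textbook form on plain `std::vector` data. It is not the OR-Tools implementation: the `Subsets` alias, the free functions, and their signatures are hypothetical and chosen only for illustration (for instance, `UpdateMultipliers` here takes a precomputed subgradient rather than the reduced costs), and the library's actual formulas, clamping, and types may differ.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical toy types; OR-Tools uses its own strongly typed vectors.
using Cost = double;
// subsets[j] lists the indices of the elements covered by subset j.
using Subsets = std::vector<std::vector<int>>;

// Reduced cost r_j = c_j - sum of the multipliers u_i of the elements in subset j.
std::vector<Cost> ReducedCosts(const Subsets& subsets,
                               const std::vector<Cost>& costs,
                               const std::vector<Cost>& multipliers) {
  std::vector<Cost> reduced(costs);
  for (std::size_t j = 0; j < subsets.size(); ++j) {
    for (int e : subsets[j]) reduced[j] -= multipliers[e];
  }
  return reduced;
}

// Lagrangian value L(u) = sum_i u_i + sum_j min(0, r_j).
Cost LagrangianValue(const std::vector<Cost>& reduced,
                     const std::vector<Cost>& multipliers) {
  Cost value = 0.0;
  for (Cost u : multipliers) value += u;
  for (Cost r : reduced) value += std::min(0.0, r);
  return value;
}

// Subgradient s_i = 1 - (number of subsets with negative reduced cost covering i).
std::vector<Cost> Subgradient(const Subsets& subsets,
                              const std::vector<Cost>& reduced,
                              std::size_t num_elements) {
  std::vector<Cost> s(num_elements, 1.0);
  for (std::size_t j = 0; j < subsets.size(); ++j) {
    if (reduced[j] < 0.0) {
      for (int e : subsets[j]) s[e] -= 1.0;
    }
  }
  return s;
}

// Projected subgradient step:
// u_i <- max(0, u_i + step_size * (upper_bound - L) / ||s||^2 * s_i).
void UpdateMultipliers(double step_size, Cost lagrangian_value,
                       Cost upper_bound, const std::vector<Cost>& subgradient,
                       std::vector<Cost>* multipliers) {
  Cost norm2 = 0.0;
  for (Cost s : subgradient) norm2 += s * s;
  if (norm2 == 0.0) return;  // Zero subgradient: nothing to update.
  const Cost factor = step_size * (upper_bound - lagrangian_value) / norm2;
  for (std::size_t i = 0; i < multipliers->size(); ++i) {
    (*multipliers)[i] =
        std::max(0.0, (*multipliers)[i] + factor * subgradient[i]);
  }
}
```

In this formulation, any nonnegative multiplier vector gives a lower bound `LagrangianValue(...)` on the optimal cover cost, and repeating the compute-then-update cycle is one common way to tighten that bound, which is presumably the role of `ComputeLowerBound` in the table above.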